| Field | Value |
| --- | --- |
| Uploader | Tinkerbell9876 |
| Date Added | 17.02.2016 |
| File Size | 36.76 MB |
| Operating Systems | Windows NT/2000/XP/2003/7/8/10, MacOS 10/X |
| Downloads | 25058 |
| Price | Free* [*Free Registration Required] |

Tutorial: Use the Amazon SageMaker Python SDK to Train AutoML Models with Autopilot - The New Stack

To call Amazon SageMaker from Python, create a low-level client with `import boto3` and `client = boto3.client('sagemaker')`. Amazon SageMaker uses AWS Security Token Service to download model artifacts from the S3 path you provided. In File input mode, Amazon SageMaker downloads the training data from Amazon S3 to the storage volume that is attached to the training instance.

Python – Download & Upload Files in Amazon S3 using Boto3

In this blog, we're going to cover how you can use the Boto3 AWS SDK (software development kit) to download and upload objects to and from your Amazon S3 buckets. For those of you who aren't familiar with Boto, it's the primary Python SDK used to interact with Amazon's APIs.

`download_fileobj(Bucket, Key, Fileobj, ExtraArgs=None, Callback=None, Config=None)` downloads an object from S3 to a file-like object. The file-like object must be in binary mode. This is a managed transfer, which will perform a multipart download in multiple threads if necessary.
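A minimal sketch of pulling an S3 object onto a SageMaker notebook instance with Boto3, assuming the notebook's IAM role can read the bucket. `download_file` writes the object to a local path, while `download_fileobj` writes it into an in-memory `io.BytesIO` buffer, which satisfies the binary-mode requirement. The helper names and the bucket and key in the `__main__` block are hypothetical placeholders.

```python
import io


def download_to_path(s3_client, bucket, key, local_path):
    """Download an S3 object to a file on the instance's local disk (hypothetical helper)."""
    s3_client.download_file(bucket, key, local_path)
    return local_path


def download_to_memory(s3_client, bucket, key):
    """Download an S3 object into memory and return its bytes (hypothetical helper)."""
    buffer = io.BytesIO()  # download_fileobj needs a binary-mode file-like object
    s3_client.download_fileobj(bucket, key, buffer)
    buffer.seek(0)  # rewind so the contents can be read from the start
    return buffer.read()


if __name__ == "__main__":
    import boto3

    s3 = boto3.client("s3")
    # Hypothetical bucket and key; replace with your own.
    data = download_to_memory(s3, "my-example-bucket", "data/train.csv")
    print(len(data))
```

Passing the client in as a parameter keeps the helpers usable with any configured client and testable without AWS credentials.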

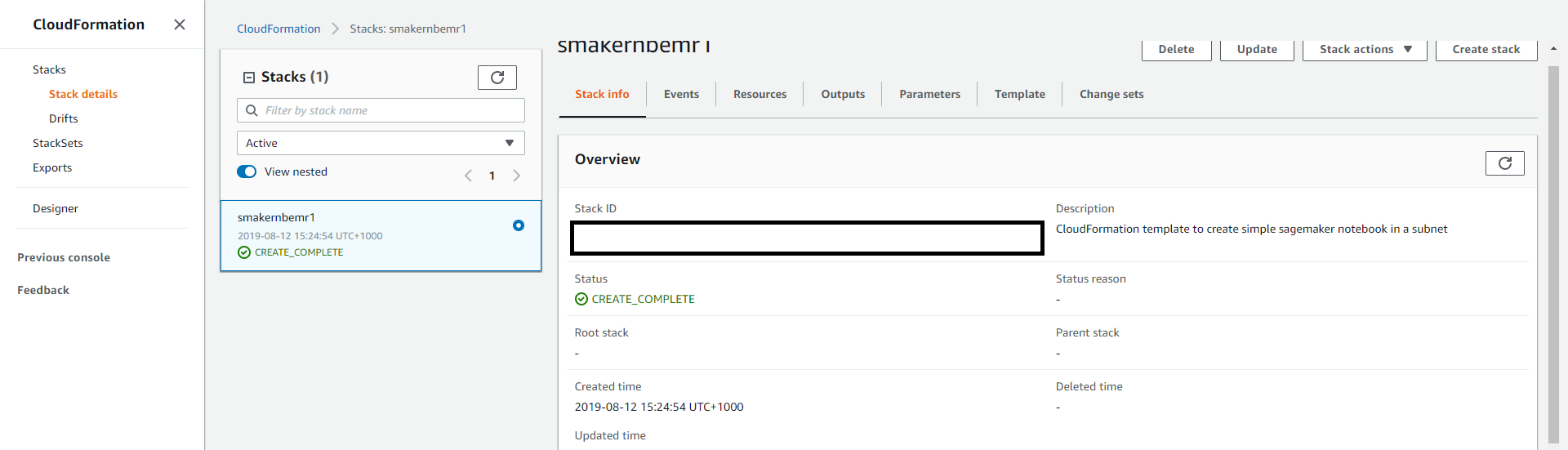

Boto3 download file to sagemaker

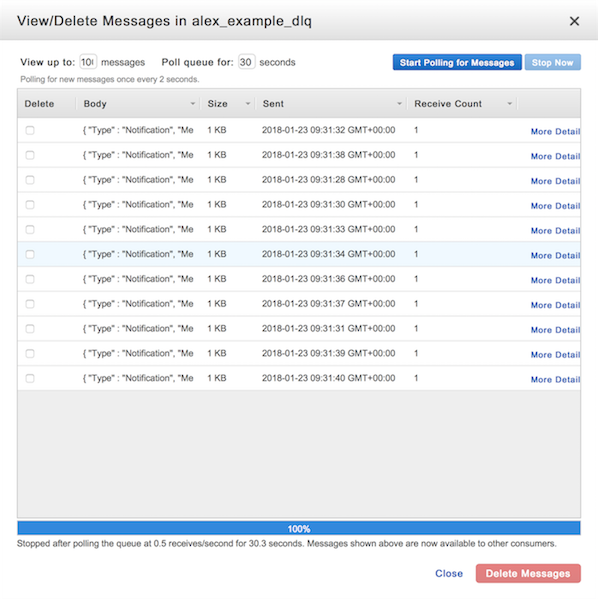

After you deploy a model into production using Amazon SageMaker hosting services, your client applications use the InvokeEndpoint API (exposed in Boto3 as `invoke_endpoint` on the `sagemaker-runtime` client) to get inferences from the model hosted at the specified endpoint.

Amazon SageMaker might add additional headers. You should not rely on the behavior of headers outside those enumerated in the request syntax. A customer's model containers must respond to requests within 60 seconds. If your model's processing time approaches that limit, set the SDK socket timeout to 70 seconds so the client does not give up before the container responds.
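One way to apply the 70-second socket timeout, sketched under the assumption that you call the endpoint through Boto3: botocore's `Config` object accepts `read_timeout` (the socket read timeout in seconds), and the `sagemaker-runtime` client takes it via `config=`. The helper names `runtime_config_kwargs` and `make_runtime_client` are hypothetical.

```python
# Container response limit stated for InvokeEndpoint (seconds).
CONTAINER_LIMIT_SECONDS = 60
# Socket timeout recommended when processing time approaches that limit.
READ_TIMEOUT_SECONDS = 70


def runtime_config_kwargs(read_timeout=READ_TIMEOUT_SECONDS):
    """Return keyword arguments for botocore.config.Config (hypothetical helper)."""
    if read_timeout <= CONTAINER_LIMIT_SECONDS:
        raise ValueError("read_timeout should exceed the 60-second container limit")
    return {"read_timeout": read_timeout}


def make_runtime_client(read_timeout=READ_TIMEOUT_SECONDS):
    """Create a SageMaker runtime client whose socket timeout outlasts the container."""
    # Imported lazily so the helper above works without boto3 installed.
    import boto3
    from botocore.config import Config

    config = Config(**runtime_config_kwargs(read_timeout))
    return boto3.client("sagemaker-runtime", config=config)
```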

Endpoints are scoped to an individual account and are not public. The request takes the following parameters:

- `EndpointName`: The name of the endpoint that you specified when you created the endpoint using the CreateEndpoint API.
- `Body`: Provides input data, in the format specified in the `ContentType` request header. Amazon SageMaker passes all of the data in the body to the model. For information about the format of the request body, see Common Data Formats for Inference.

The response contains the following fields:

- `Body` (`StreamingBody`): The inference returned by the model. For information about the format of the response body, see Common Data Formats for Inference.
- `CustomAttributes`: Provides additional information in the response about the inference returned by a model hosted at an Amazon SageMaker endpoint. The information is an opaque value that is forwarded verbatim.

You could use this value, for example, to return an ID received in the CustomAttributes header of a request or other metadata that a service endpoint was programmed to produce.

If the customer wants the custom attribute returned, the model must set the custom attribute to be included on the way back.
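The request and response fields above map onto Boto3's `invoke_endpoint` call. The sketch below assumes a model container that accepts and returns JSON and that echoes the `CustomAttributes` value back; the `request-id=...` attribute format and the helper name `invoke_with_attributes` are hypothetical.

```python
import json


def invoke_with_attributes(runtime_client, endpoint_name, payload, request_id):
    """Send a JSON payload and return (prediction, custom_attributes). Hypothetical helper."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",  # format of the request Body (assumed JSON)
        Accept="application/json",  # format requested for the response (assumed JSON)
        Body=json.dumps(payload).encode("utf-8"),
        CustomAttributes=f"request-id={request_id}",  # opaque, forwarded verbatim
    )
    # Body is a StreamingBody; read() returns the raw bytes produced by the model.
    prediction = json.loads(response["Body"].read())
    # Present only if the model container set it on the way back.
    attributes = response.get("CustomAttributes")
    return prediction, attributes
```

Reading `CustomAttributes` with `.get()` rather than indexing reflects that the value only appears when the model chooses to return it.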



Feb 28: In the last tutorial, we saw how to use Amazon SageMaker Studio to create models through Autopilot. In this installment, we will take a closer look at the Python SDK to script an end-to-end workflow to train and deploy a model. We will use batch inferencing and store the output in …

Mar 10: Amazon SageMaker Examples. Amazon SageMaker Pre-Built Framework Containers and the Python SDK. Pre-Built Deep Learning Framework Containers: these examples focus on the Amazon SageMaker Python SDK, which allows you to write idiomatic TensorFlow or MXNet code and then train or host it in pre-built containers.

With the low-level client (`client = boto3.client('sagemaker')`), the training input mode is either FILE or PIPE. In FILE mode, Amazon SageMaker copies the data from the input source onto the local Amazon Elastic Block Store (Amazon EBS) volumes before starting your training algorithm. To download the data from Amazon Simple Storage Service …
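For the low-level `create_training_job` call, the input mode appears in `AlgorithmSpecification["TrainingInputMode"]` and, optionally per channel, as `InputMode`; the API spells the values `File` and `Pipe`. A minimal sketch that builds those request fragments, with a hypothetical helper name and assumed defaults (a `train` channel, CSV content, a single S3 prefix):

```python
VALID_MODES = ("File", "Pipe")  # spelling used by the CreateTrainingJob API


def training_input_config(s3_uri, input_mode="File", channel="train", content_type="text/csv"):
    """Build AlgorithmSpecification and InputDataConfig fragments (hypothetical helper)."""
    if input_mode not in VALID_MODES:
        raise ValueError(f"input_mode must be one of {VALID_MODES}")
    algorithm_spec_fragment = {"TrainingInputMode": input_mode}
    input_data_config = [
        {
            "ChannelName": channel,
            "DataSource": {
                "S3DataSource": {
                    "S3DataType": "S3Prefix",  # treat s3_uri as a key prefix
                    "S3Uri": s3_uri,
                    "S3DataDistributionType": "FullyReplicated",  # every instance gets all data
                }
            },
            "ContentType": content_type,
            "InputMode": input_mode,  # per-channel override; matches the job-level mode here
        }
    ]
    return algorithm_spec_fragment, input_data_config
```

The returned fragments would be merged into a full `create_training_job` request alongside `TrainingJobName`, `TrainingImage` (inside `AlgorithmSpecification`), `RoleArn`, `OutputDataConfig`, `ResourceConfig`, and `StoppingCondition`.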
